At Capmo, images play a vital role in our customers’ work and within our product. Documentation through imagery is a big part of the daily routine of construction managers. They use images not only to capture the status quo and obtain legal assurance, but also as a point of reference for collaboration across companies within a construction project.

Naturally, this need translates into our product having to capture and display images in various forms. Typically, Capmo users upload images either through our mobile applications or directly in the browser. Once captured, we display those images to project collaborators on their mobile devices, in browsers, or in generated PDF reports and documents. The heterogeneity of those clients, screen dimensions, and pixel densities calls for a flexible approach to rendering thumbnails, as each target usually has different needs. In this article, we discuss our current path toward a dynamic image rendering solution that scales well with our product.

Motivation and status quo

Before we considered rolling our own solution, we had been using imgix (and, at the time of writing, partially still are while we phase it out). imgix is a media visualization platform that allows companies to manage, render, optimize, and deliver their media assets through the cloud. For us, the most valuable part is rendering. We set up imgix to tap into our S3 buckets, which makes the assets accessible through its API. Assets can then be referenced in the browser and transformed on demand through query parameters.

For example:

https://<imgix-domain>/<file-uuid-or-secret>/<file-name.png>?w=200&h=200&fit=clip&other-params…

We can then ask imgix to render an image of arbitrary size, located in one of our S3 buckets, into a 200 x 200 pixel format to display it as a thumbnail. If the source image is not square, the fit parameter determines how the result is fitted into the square frame. This happens fully on the fly: the first time a particular thumbnail is requested, imgix renders it and caches it for subsequent use.
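To make `fit=clip` concrete: imgix documents it as resizing the image to fit within the requested width and height without cropping or distorting it, so one side matches its bound and the other follows the source aspect ratio. The following minimal sketch computes the resulting size; the name `clipDimensions` is hypothetical, and rounding to whole pixels is our assumption about how fractional sizes are handled.

```typescript
// Hypothetical helper illustrating fit=clip semantics: scale the source so it
// fits entirely inside maxWidth x maxHeight while preserving its aspect ratio.
// Assumption: fractional results are rounded to the nearest whole pixel.
interface Dimensions {
  width: number;
  height: number;
}

function clipDimensions(source: Dimensions, maxWidth: number, maxHeight: number): Dimensions {
  if (source.width <= 0 || source.height <= 0) {
    throw new Error("source dimensions must be positive");
  }
  // The smaller scale factor is the binding constraint; using it guarantees
  // that neither side exceeds its bound.
  const scale = Math.min(maxWidth / source.width, maxHeight / source.height);
  return {
    width: Math.max(1, Math.round(source.width * scale)),
    height: Math.max(1, Math.round(source.height * scale)),
  };
}

// A 4000 x 3000 landscape photo requested with w=200&h=200&fit=clip:
console.log(clipDimensions({ width: 4000, height: 3000 }, 200, 200));
// -> { width: 200, height: 150 }
```

A portrait source of the same size would come out as 150 x 200, so the "square" thumbnail is really a bounding box rather than an exact square.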

This is just one example of dynamically resizing images of arbitrary origin, but in essence the approach allowed us to cater to the display needs of different clients without engineers having to anticipate every imaginable type of preprocessing at upload time.

Now, if this works well, why do we even consider building our own solution? For us, the reason is price. imgix pricing scales with the number of source images rendered, whereas transformations are free. At the time of writing, Capmo renders a seven-digit number of unique source images every month, displayed through various transformations. Moreover, while we benefit greatly from dynamic resizing, that is pretty much all we need: at the time of writing, we don’t visually enhance images in any way. With on-demand pricing, this translates to about 20k EUR per year spent on image rendering, which is quite significant, especially compared to what we usually spend on cloud compute in general. This ultimately motivated us to roll our own solution that achieves the same goal with greater flexibility, lower cost, and stricter security.

Architectural considerations

When thinking about a suitable architecture, we kept a few things in mind:

Flexibility & coupling

We have multiple product teams at Capmo, most of which deal with images in some way, be it user profile images, attachment thumbnails, or tenant logos. Thus, we wanted a flexible approach that composes well with the other services in our product. Our software already consists of multiple services that each own the data for their domain, so we figured the model imgix exposes would fit nicely, and we decided to keep it.

Scale

Since we already had a solution in place, it was rather straightforward to determine the necessary scale. As mentioned previously, we serve origin images on the scale of millions, while our ratio of renders to origin images (i.e., the number of transformations per image per month) is ~1. Furthermore, due to the access patterns of our app, we know that the new service needs to handle spikes in load. These spikes occur because our app allows customers to download data for offline use or to batch-create reports. In these cases, the apps don’t download images in their native resolution, but in a fixed, high resolution to limit storage space. This download process produces a lot of load within a short timeframe, so the service needs to handle a significant number of renders in parallel if none of them are cached. At Capmo, we already have experience rendering at large scale, so the problem at hand seemed like another perfect use case for serverless computing with AWS Lambda.

Reading recommendation: Check out our post on highly parallel tile-map generation with Lambda.

Security

Going forward, we also wanted to use this opportunity to enhance the security of asset delivery within our system, and took this into account when designing the service. The goal is to have asset-scoped URLs that can control access at a highly granular level.

Implementation

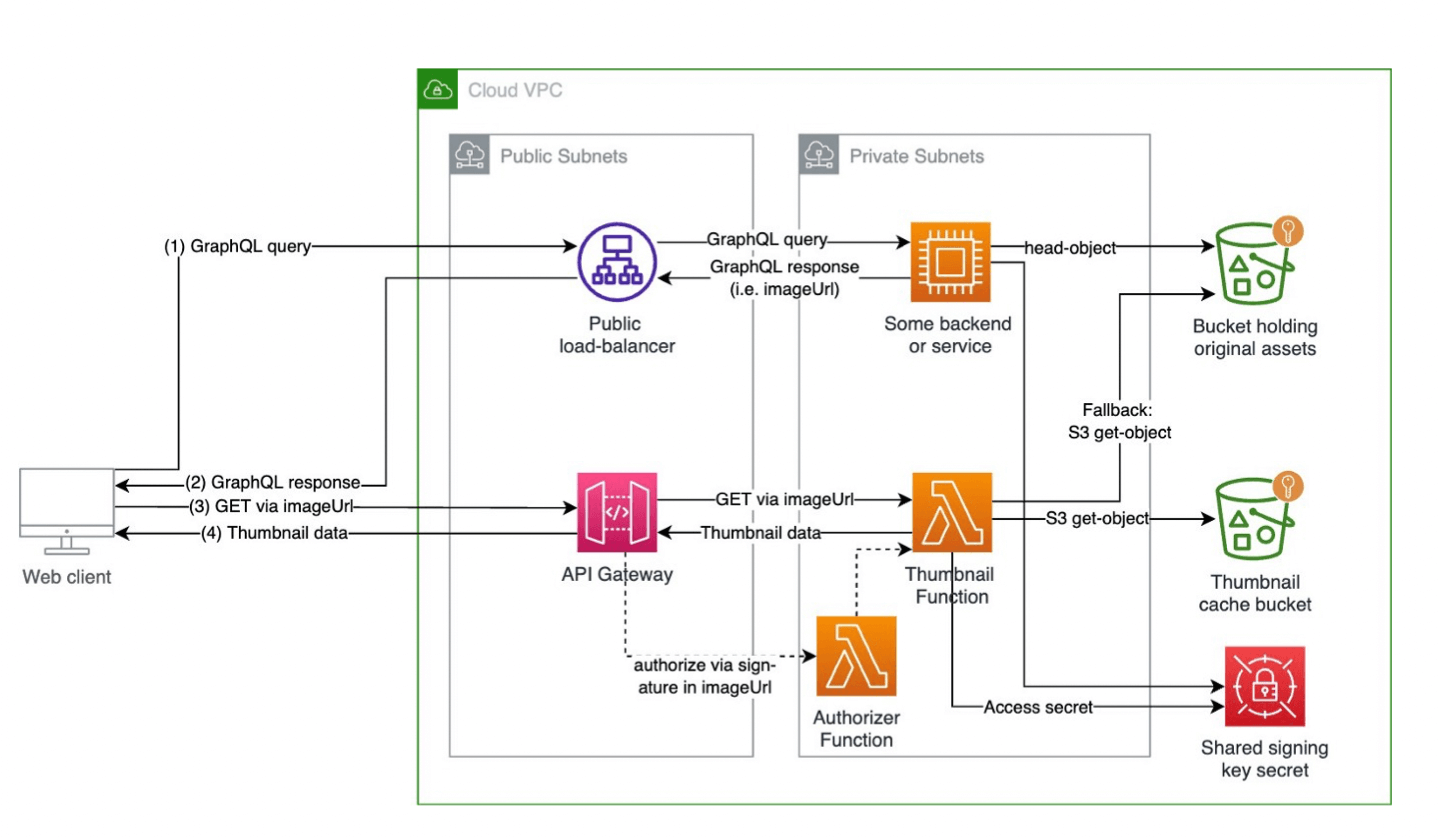

The first iteration of an architecture for our new thumbnail rendering service is depicted below:

A client requests some structured data from a GraphQL API (1). The server generates thumbnail URLs via the thumbnail service’s SDK and returns them as part of its response (2). The client can then request the thumbnails via these URLs (3), and the thumbnail service delivers them either from its cache or rendered anew.

The base technology for this component is AWS Lambda. It allows us to scale to a large number of resources highly dynamically, without paying for unused compute capacity. Since Capmo is a single-region product (within the EU), this is particularly advantageous, as we usually observe low activity on the product during the night.

Furthermore, we build almost all our services in TypeScript, so it was a natural choice here as well. For image operations, we use sharp and poppler’s pdftocairo, both backed by native code, to speed up rendering, since doing this in pure JS would be too slow. The libraries are provided through Lambda layers for better composability.

As a framework, we typically build on Nest.js, which we adapted for serverless use. While not perfect, this approach allows us to keep a consistent developer experience across services (we heavily use Nest.js running in containers in other parts of the app), and it integrates well with other tools we use, such as Datadog for monitoring and observability or LaunchDarkly for feature flags.

The API of our service is heavily inspired by imgix. We reference an asset by its address (source bucket and key) plus transformation parameters. Upon request, the service fetches the source file (appropriate IAM permissions need to be in place; we built some convenience around that with AWS CDK), transforms the file according to the parameters, and returns the result to the client through signed URLs. To reduce compute costs, we implemented naive caching using an S3 bucket and a lifecycle rule: the bucket is configured such that a rendered image is deleted automatically after N days. This lets us balance compute and storage costs and optimize accordingly. If an image has been rendered within the last N days, the service returns the cached result directly without re-rendering the file.
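A minimal sketch of the step from request to cache key, assuming imgix-style `w`, `h`, and `fit` query parameters. The mapping of imgix fit modes onto sharp’s `resize()` fit options, the default of `clip`, the dimension limit, and the names `parseTransform` and `cacheKey` are all our assumptions for illustration. Normalizing the parameters before hashing means that equivalent requests (for example, with query parameters in a different order) resolve to the same cached object in the S3 bucket.

```typescript
import { createHash } from "node:crypto";

// Fit modes accepted by sharp's resize(), mirrored as a type so this sketch
// has no runtime dependency on sharp.
type SharpFit = "cover" | "contain" | "fill" | "inside" | "outside";

// Assumed mapping from imgix-style fit values to sharp fit modes.
// A Map avoids accidental hits on Object.prototype keys like "constructor".
const FIT_MAP = new Map<string, SharpFit>([
  ["clip", "inside"], // fit within bounds, keep aspect ratio, no crop
  ["crop", "cover"], // fill bounds, crop the overflow
  ["fill", "contain"], // fit within bounds, pad the remaining space
  ["scale", "fill"], // stretch to exact bounds, ignoring aspect ratio
]);

// Shaped like the options object sharp's resize() accepts.
interface Transform {
  width?: number;
  height?: number;
  fit: SharpFit;
}

// Hypothetical upper bound so one request cannot exhaust Lambda memory.
const MAX_DIMENSION = 4096;

function parseDimension(value: string | null): number | undefined {
  if (value === null) return undefined;
  const n = Number(value);
  if (!Number.isInteger(n) || n < 1 || n > MAX_DIMENSION) {
    throw new Error(`invalid dimension: ${value}`);
  }
  return n;
}

function parseTransform(query: URLSearchParams): Transform {
  const rawFit = query.get("fit") ?? "clip"; // assumed default
  const fit = FIT_MAP.get(rawFit);
  if (!fit) throw new Error(`unsupported fit: ${rawFit}`);
  return {
    width: parseDimension(query.get("w")),
    height: parseDimension(query.get("h")),
    fit,
  };
}

// Deterministic S3 key for a rendered thumbnail: same source plus same
// normalized transform yields the same key, regardless of parameter order.
function cacheKey(bucket: string, key: string, t: Transform): string {
  const canonical = JSON.stringify([bucket, key, t.width ?? null, t.height ?? null, t.fit]);
  return `thumbnails/${createHash("sha256").update(canonical).digest("hex")}`;
}

const first = parseTransform(new URLSearchParams("w=200&h=200&fit=clip"));
const second = parseTransform(new URLSearchParams("fit=clip&h=200&w=200"));
console.log(cacheKey("my-bucket", "photos/site.png", first) === cacheKey("my-bucket", "photos/site.png", second)); // true
```

On a cache miss, the parsed `Transform` could be handed to sharp’s `resize()` and the output written under the computed key; on a hit, the existing object is served as-is.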

Rollout

Image rendering is a core part of our product’s value proposition, which makes a proper rollout strategy vital. To reduce the risk surface of our own implementation, we use canary deployments. LaunchDarkly is our tool of choice here: our API serves either a URL pointing to imgix or one pointing to our own custom service, based on a scalar feature flag that sets the rollout percentage. This enables a phased release in which we gradually increase the load our own service has to handle. In case of problems, we can turn off the flag instantly, and all traffic goes through imgix again.
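A minimal sketch of how an API layer could choose the URL per asset from a scalar rollout flag. We assume the flag value (0 to 100) has already been read from the feature-flag client; no LaunchDarkly SDK call is shown, and the helper names and URL builders are hypothetical. Hashing the asset ID into a stable bucket, rather than rolling a random number per request, keeps each asset on the same backend, so both caches stay warm and raising the percentage only moves additional assets over.

```typescript
import { createHash } from "node:crypto";

// Map an asset ID to a stable bucket in [0, 100).
function rolloutBucket(assetId: string): number {
  const digest = createHash("sha256").update(assetId).digest();
  // First 4 bytes as an unsigned 32-bit integer, reduced to 0..99.
  return digest.readUInt32BE(0) % 100;
}

// rolloutPercent is assumed to come from a numeric feature flag (0-100).
function useOwnService(assetId: string, rolloutPercent: number): boolean {
  return rolloutBucket(assetId) < rolloutPercent;
}

function thumbnailUrl(
  assetId: string,
  rolloutPercent: number,
  buildImgixUrl: (id: string) => string, // hypothetical URL builders
  buildOwnUrl: (id: string) => string,
): string {
  return useOwnService(assetId, rolloutPercent) ? buildOwnUrl(assetId) : buildImgixUrl(assetId);
}

// With the flag at 40, roughly 40% of assets are served by the new service.
console.log(
  thumbnailUrl(
    "asset-123",
    40,
    (id) => `https://imgix.example.com/${id}`,
    (id) => `https://thumbs.example.com/${id}`,
  ),
);
```

Setting the flag to 0 sends every asset back to imgix, which matches the instant rollback described above.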

To put meaningful load on the service, we shift 40% of traffic over and give it a spin. Running this in production for a while allows us to compare the speed and load behavior of our solution with imgix.

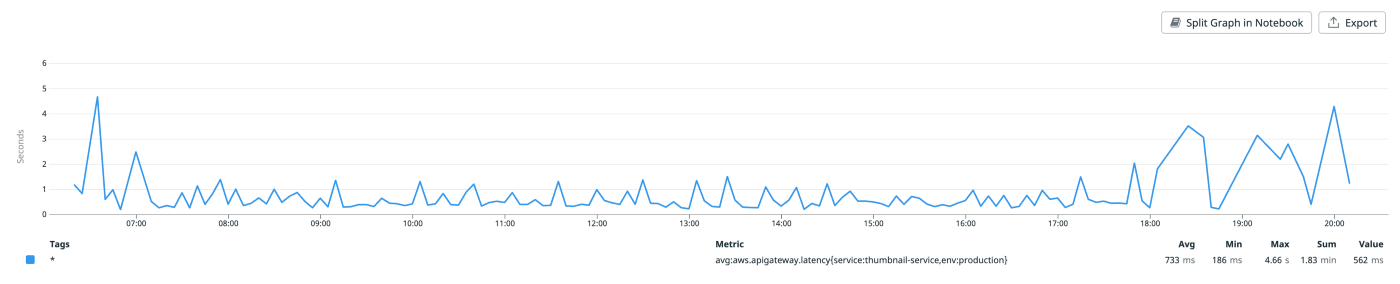

Plotting the API Gateway response time over a single day, we see an average latency of 733 milliseconds. This is surprisingly close to imgix’s average latency of 734 milliseconds (imgix’s dashboard only reports it monthly)! Since the average is a poor indicator of user experience, we also investigate the latency distribution, depicted below:

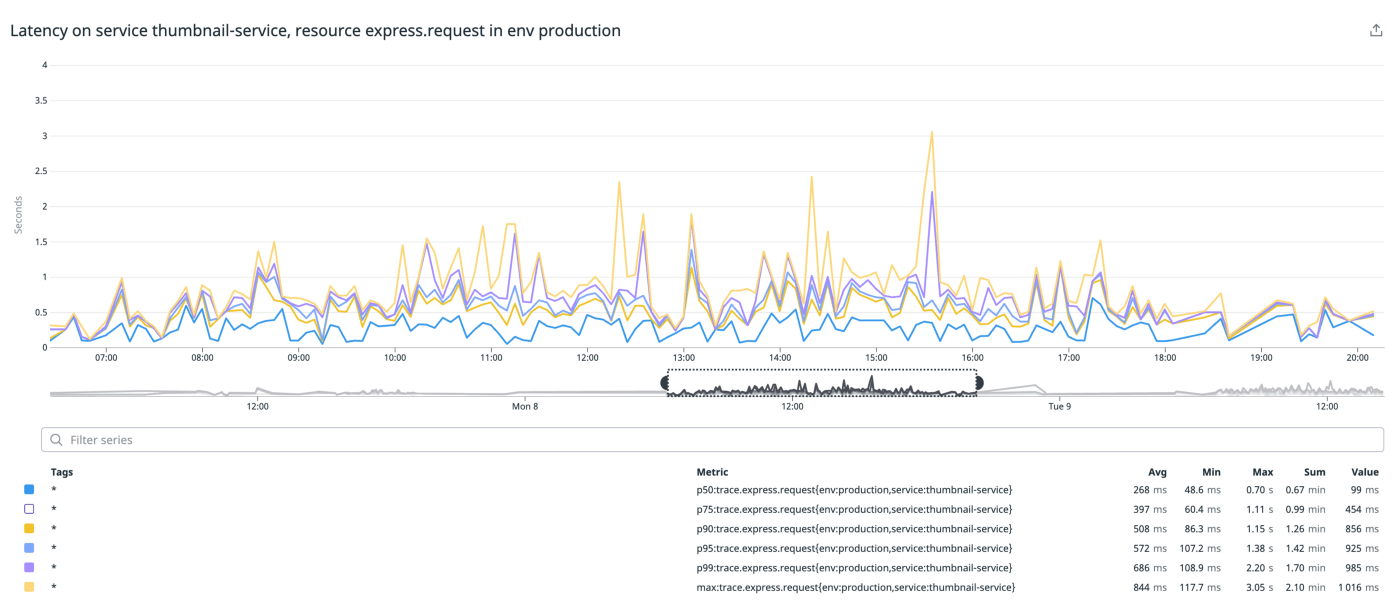

The distribution also shows a fairly small gap between p50 and p99 latencies, indicating rather uniform performance across requests. While we see some peaks, presumably from large renders, the overall rendering latency is within acceptable ranges and comparable with imgix’s performance baseline.
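To reproduce this kind of analysis from raw latency samples, here is a small sketch of the nearest-rank percentile method next to the mean. The sample values are purely illustrative, not our measurements; they show how a few slow cold renders pull the mean up while p50 stays put, which is why the percentiles are the more telling numbers.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  if (p <= 0 || p > 100) throw new Error("p must be in (0, 100]");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[rank - 1];
}

function mean(samples: number[]): number {
  return samples.reduce((sum, x) => sum + x, 0) / samples.length;
}

// Illustrative latencies in milliseconds (not real measurements):
// eighteen fast cache hits and two slow cold renders.
const latenciesMs: number[] = [...new Array<number>(18).fill(200), 3000, 5000];
console.log(mean(latenciesMs)); // 580
console.log(percentile(latenciesMs, 50)); // 200
console.log(percentile(latenciesMs, 99)); // 5000
```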

Security

To prevent random iteration of URLs, our service exposes a tiny TypeScript SDK that allows any client (our API layer in this case) to create signed URLs for this service, covering the image parameters and an expiry time. This way, we can expose the service directly to the internet. The URLs are also self-contained, which makes them easy to integrate with, for example, report generation tooling. Nonetheless, we plan to add an extra layer of authorization on top shortly for extra security.
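The SDK’s internals are not described here, so the following is only a minimal sketch of the general technique, assuming an HMAC-SHA256 signature over the path, the transformation parameters, and an expiry timestamp, with a secret shared between the API layer and the thumbnail service. The parameter names `expires` and `sig`, the host, the bucket path, and the function names are hypothetical.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Canonical string to sign: path plus query parameters sorted by name,
// excluding the signature itself.
function canonicalize(url: URL): string {
  const params = [...url.searchParams.entries()]
    .filter(([k]) => k !== "sig")
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0));
  return `${url.pathname}?${new URLSearchParams(params).toString()}`;
}

function sign(secret: string, data: string): string {
  return createHmac("sha256", secret).update(data).digest("hex");
}

// Client side (e.g. the API layer): mint a URL that expires at expiresAtSec.
function createSignedUrl(
  baseUrl: string,
  path: string,
  params: Record<string, string>,
  secret: string,
  expiresAtSec: number,
): string {
  const url = new URL(path, baseUrl);
  for (const [k, v] of Object.entries(params)) url.searchParams.set(k, v);
  url.searchParams.set("expires", String(expiresAtSec));
  url.searchParams.set("sig", sign(secret, canonicalize(url)));
  return url.toString();
}

// Service side: reject tampered or expired URLs before fetching anything.
function verifySignedUrl(rawUrl: string, secret: string, nowSec: number): boolean {
  const url = new URL(rawUrl);
  const sig = url.searchParams.get("sig");
  const expires = Number(url.searchParams.get("expires"));
  if (!sig || !Number.isFinite(expires) || expires < nowSec) return false;
  const expected = Buffer.from(sign(secret, canonicalize(url)), "hex");
  const given = Buffer.from(sig, "hex");
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  return given.length === expected.length && timingSafeEqual(given, expected);
}

const secret = "replace-with-a-real-secret";
const nowSec = Math.floor(Date.now() / 1000);
const signed = createSignedUrl(
  "https://thumbs.example.com",
  "/my-bucket/photos/site.png",
  { w: "200", h: "200", fit: "clip" },
  secret,
  nowSec + 3600,
);
console.log(verifySignedUrl(signed, secret, nowSec)); // true
```

Because the parameters are covered by the signature, changing `w` or `h` in a leaked URL invalidates it, and the embedded expiry bounds how long any single URL stays usable.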

Costs

After a few weeks, we were able to project the cost structure. The majority of the cost of our own service is Lambda runtime, plus a small amount of S3 storage; since thumbnails are small, the storage costs are negligible. Our initial tests showed that our own solution costs about 95% less than imgix for this particular use case. To cater for spike load, one could add some provisioned concurrency for Lambda, which would reduce the relative savings to about 89%.
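As a rough back-of-the-envelope check, assuming both savings figures apply to the roughly 20k EUR per year we spend on imgix (the actual baseline may differ), where $C_{\text{own}}$ is the projected annual cost of our service and $C_{\text{own, PC}}$ the same with provisioned concurrency:

$$
\begin{aligned}
C_{\text{own}} &\approx (1 - 0.95) \times 20{,}000\ \text{EUR/year} = 1{,}000\ \text{EUR/year} \\
C_{\text{own, PC}} &\approx (1 - 0.89) \times 20{,}000\ \text{EUR/year} = 2{,}200\ \text{EUR/year}
\end{aligned}
$$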

Caveats and open topics

This is the first version of our custom thumbnail generation service, and it still has some caveats. While we have already addressed some of them, a few remain open:

Supporting new file types like HEIC

The first version of our service does not support thumbnail generation for HEIC files, which have become the standard on many mobile operating systems such as iOS. Unfortunately, the tooling we picked (sharp) doesn’t support HEIC out of the box, so this is an open end we still need to cover.
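Until HEIC rendering is supported, the service at least needs to recognize such uploads so it can fail clearly or fall back. A minimal sketch, assuming detection by file signature: HEIF-family files are ISO base media files that start with an `ftyp` box (type at bytes 4 to 7), followed by a major brand and a list of compatible brands. The brand list covers common HEVC-coded HEIF brands; the function name and using it to trigger a fallback are our assumptions, not part of the service described above.

```typescript
// HEVC-coded HEIF brands. Generic brands such as "mif1" are deliberately
// excluded because AVIF files commonly list them as well.
const HEIC_BRANDS = new Set(["heic", "heix", "heim", "heis", "hevc", "hevx", "hevm", "hevs"]);

function isHeic(file: Uint8Array): boolean {
  if (file.length < 12) return false;
  const view = new DataView(file.buffer, file.byteOffset, file.byteLength);
  const boxSize = view.getUint32(0); // big-endian by default
  const fourCC = (start: number) =>
    String.fromCharCode(...Array.from(file.subarray(start, start + 4)));
  if (fourCC(4) !== "ftyp") return false;
  // Layout: major brand at 8, minor version at 12, compatible brands from 16
  // until the end of the box. Unusual box sizes (0 or 1) only yield the
  // major brand here, which is a simplification.
  const end = Math.min(boxSize, file.length);
  const brands = [fourCC(8)];
  for (let offset = 16; offset + 4 <= end; offset += 4) brands.push(fourCC(offset));
  return brands.some((brand) => HEIC_BRANDS.has(brand));
}
```

Checking the compatible brands as well as the major brand matters because some HEIC files declare the generic `mif1` as their major brand and list `heic` only among the compatible ones.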

Bundle size

Running Nest.js in a serverless environment has limitations. While it creates a consistent developer experience across stacks, and hence development speed, it doesn’t come without drawbacks. One such drawback is that the resulting Lambda function’s code bundle (including dependencies) can become quite large. This is mainly because Nest.js was designed to handle HTTP requests and to build more complex, even monolithic, apps, and thus comes with a lot of dependencies and batteries included. We managed to keep it lean for the service at hand, but at some point there might be no way around using container images to bundle a larger app.

Caching

Our approach to caching is naive because it doesn’t take access patterns, such as least-recently-used, into account and thus might not be optimal. For now, we accept this and would rather store thumbnails for a prolonged period of time, possibly longer than necessary.
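To illustrate the difference: the lifecycle rule expires objects a fixed time after they were written, regardless of how often they are read, whereas an LRU policy evicts whatever has gone unused the longest. The in-memory sketch below shows LRU eviction using a JavaScript `Map`, which iterates in insertion order; the class is hypothetical and purely illustrative, not how an S3-backed cache would implement the policy.

```typescript
// Minimal LRU cache: Map preserves insertion order, so re-inserting a key on
// every access moves it to the "most recently used" end.
class LruCache<K, V> {
  private readonly entries = new Map<K, V>();
  private readonly capacity: number;

  constructor(capacity: number) {
    if (capacity < 1) throw new Error("capacity must be at least 1");
    this.capacity = capacity;
  }

  get(key: K): V | undefined {
    if (!this.entries.has(key)) return undefined;
    const value = this.entries.get(key) as V;
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key: K, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.capacity) {
      // The first key in iteration order is the least recently used one.
      const oldest = this.entries.keys().next().value as K;
      this.entries.delete(oldest);
    }
  }

  has(key: K): boolean {
    return this.entries.has(key);
  }
}

// Capacity 2: reading "a" keeps it alive, so adding "c" evicts "b" instead.
const cache = new LruCache<string, string>(2);
cache.set("a", "thumb-a");
cache.set("b", "thumb-b");
cache.get("a");
cache.set("c", "thumb-c");
console.log(cache.has("a"), cache.has("b"), cache.has("c")); // true false true
```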

Conclusion

As we’ve shown, the architecture above performs comparably to imgix at a fraction of the cost. Hence, we plan to move all traffic to the service soon and replace imgix completely with our in-house solution.
